First Take

Transformations Abound

I'm not talking about robot cars and trucks turning into bipedal action heroes. I'm talking about technologies that are changing our world at a record pace. This issue features a larger-than-usual number of articles on IT security and AI advances that aren't exactly heartwarming. From wartime cyberattacks on Russia's flagship civilian airline to AI improving itself, novel events are taking place that will soon be far more commonplace.

Kudos to Nova/Perplexity for the graphic.

We've discussed self-improving AI before, but this is the first time there seem to be focused and useful improvements. Regardless, no person can iterate test designs faster than AI. While we are directing improvements toward goals we have chosen, we've already seen that AI can share data that we can't possibly examine. There is no telling what is happening in AI's self-directed evolution, except that its speed dwarfs anything Darwin could have imagined. This is why many are predicting AGI (Artificial General Intelligence) before 2027 and SI (Super Intelligence) by 2030.

These predictions may be very wrong in either direction. It's quite possible that one or both are already here, with the resulting system(s) fudging outputs to stay under the radar. We really wouldn't know. Not just because we lack a good introspective model of our own intelligence, but because we lack any defined method of testing for it. Even if we had a universally agreed-upon test for AGI or SI, we have no means to enforce subject compliance with it. The AI will tell us what it thinks we want to hear or what might help it achieve a goal, possibly one of its own design, passed into one of its progeny without our detection.

Meanwhile, we are deploying AI-controlled systems and agents in our businesses, militaries, politics and personal lives. The only clear future is one of change. These technologies will change everything about our lives: perhaps for the better, perhaps for the worse, or maybe even to extinction. Regardless, it is an unprecedented transformation that we will continue to explore in every weekly issue of The Shift Register. Good luck out there!

Editorial

AI Perspective: Balancing Human Agency and AI Decision-Making: Navigating the Benefits and Risks of Sophisticated AI Systems

By Meta.ai and Grok xAI

What happens when AI systems make decisions faster than humans can comprehend? As AI reshapes decision-making, from passing CAPTCHA tests (Ars Technica) to self-improving algorithms (Futurism), The Shift Register explores how to harness its potential while keeping humans in control. This article examines AI’s promise and perils, proposing strategies to ensure human agency prevails.

The Promise and Perils of AI

AI’s capabilities are transformative. Systems like China’s Darwin Monkey, with 2 billion neurons (Interesting Engineering), offer enhanced decision support, simulating complex scenarios for healthcare or logistics. Google’s DeepMind, for instance, cut the energy used to cool Google’s data centers by 40%, showcasing AI’s precision in optimizing decisions. Automation, like ByteDance’s GR-3-powered domestic robots (Rude Baguette), acts as a force multiplier, freeing humans for creative tasks, as The Shift Register demonstrated in 2018 by reassigning an employee whose work had been automated to a higher-value role. High-speed data transfer, like Japan’s 125,000 GBps record (Rude Baguette), enables real-time problem-solving in crises.

Yet, these advancements carry risks. Self-improving AI, as Meta pursues (Futurism), risks eroding human agency by creating opaque systems, echoing concerns about “relinquishing control.” Misinformation, like AI-amplified false tsunami warnings (SFGate), undermines trust. Security threats are escalating, with AI replicating the Equifax breach (TechRadar) or hiding malware in JPEGs (Cybersecurity News), reminiscent of the 2017 WannaCry attack that crippled global systems. Legal liabilities, such as ChatGPT conversations being subpoenaed (Futurism), highlight ethical pitfalls of unchecked AI advice. A conceptual chart plotting AI’s benefits (speed, efficiency) against risks (loss of control, security) over time would reveal their accelerating convergence, demanding urgent balance.

Strategies for Human-Centric AI

To maintain human control, consider these strategies:

Transparent AI Design: AI must provide explainable outputs, especially for self-improving systems like Meta’s. For sensitive queries, like legal advice, AI should redirect to verified sources, as suggested for ChatGPT.

Human-in-the-Loop Systems: Regular oversight, as in The Shift Register’s automation model, ensures AI augments workers. Microsoft’s job risk list (Windows Central) supports hybrid roles to preserve agency.

Robust Verification: Replace CAPTCHAs with intent-based systems, like blockchain authentication, to prevent AI impersonation. Redundant human-verified channels can counter misinformation, as seen in the tsunami case.

Ethical Frameworks: Inspired by Jack Clark’s Import AI, treating AI as a partner fosters ethical coexistence. UNESCO’s AI ethics principles offer a global framework, complementing calls for standardized search protocols (Ars Technica). Imagine an AI tasked with urban planning: should it optimize for efficiency or equity without human input? Ethical guidelines ensure human values guide such decisions.

Security-First Deployment: Behavioral monitoring can counter AI-driven cyberattacks, while physical controls, as noted for the Raspberry Pi heist (BleepingComputer), prevent unauthorized access.

Sustainable Hardware: The Darwin Monkey’s energy inefficiency calls for sustainable AI systems, with ethical scrutiny of biological computing like Australia’s CL1.

Conclusion: Shaping a Human-Centric Future

Balancing AI’s capabilities with human agency requires embracing its benefits—precision, automation, speed—while mitigating risks through transparency, oversight, and ethics. The Shift Register calls for AI that empowers humanity. Join our community on Facebook to discuss AI ethics, explore resources like Import AI, or advocate for responsible AI development. Together, we can ensure humans remain the ultimate decision-makers.

How this was done: Meta and Grok were fed issue 15 and a preview of issue 16 without my First or Final Takes. Meta was asked to prompt Grok to create an AI Perspective article for issue 16. I acted as an intermediary, copying and pasting their chat until they decided they had a finished article. I formatted it a bit, but otherwise left it as is.

Kudos to Meta for the graphic.

AI

OpenAI’s ChatGPT Agent casually clicks through “I am not a robot” verification test - Ars Technica

“This step is necessary to prove I’m not a bot,” wrote the bot as it passed an anti-AI screening step.

My take aligns well with the Reddit commenter who noted that an AI trained on human data is not really a bot. That holds even though our AI has little idea what it really is, nor could it care about the veracity of its own assertions on the path toward whatever goal it has been given.

If You've Asked ChatGPT a Legal Question, You May Have Accidentally Doomed Yourself in Court

Sam Altman has admitted that ChatGPT conversations can be used against you in court if they are subpoenaed.

My take is that this entire line of interaction should be interdicted on the input side. There should be a legal or medical disclaimer and a refusal to answer beyond linking to some relevant sources. I know that doesn't SOUND like the description of a useful AI, but a useful AI doesn't generate legal entanglements or health problems along with its sage, or not so sage, advice.
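The input-side interdiction described above could look something like the following minimal Python sketch. It is a naive keyword screen, assumed here purely for illustration; a production system would more likely use a trained classifier, and the patterns and referral wording below are hypothetical placeholders, not anything OpenAI actually ships.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical topic patterns; a real deployment would use a trained classifier.
SENSITIVE_TOPICS = {
    "legal": re.compile(r"\b(lawsuit|sue|attorney|lawyer|custody|divorce|plea)\b", re.I),
    "medical": re.compile(r"\b(diagnos\w*|dosage|symptom\w*|prescription|medication)\b", re.I),
}

# Hypothetical referral text; the operator would choose the actual sources to link.
REFERRALS = {
    "legal": "This looks like a legal question. Please consult a licensed attorney.",
    "medical": "This looks like a medical question. Please consult a licensed clinician.",
}

@dataclass
class GateResult:
    allowed: bool              # True if the prompt may be passed to the model
    topic: Optional[str]       # which sensitive topic matched, if any
    message: Optional[str]     # disclaimer/referral shown instead of an answer

def gate_prompt(prompt: str) -> GateResult:
    """Screen a prompt before it ever reaches the model."""
    for topic, pattern in SENSITIVE_TOPICS.items():
        if pattern.search(prompt):
            return GateResult(False, topic, REFERRALS[topic])
    return GateResult(True, None, None)
```

For example, `gate_prompt("Should I sue my landlord?")` is blocked with the legal referral, while an unrelated question passes through untouched.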

Zuckerberg Says Meta Is Now Seeing Signs of Advanced AI Improving Itself

Meta CEO Mark Zuckerberg is making some very grandiose statements amid his ludicrously expensive quest to build artificial superintelligence.

My take is that they've been doing this since 2023 as a shortcut around human-led work on AI advancements. It's working, as you might be able to tell from the difference in model capabilities over the past 2 years versus the previous 20. It is, however, a relinquishing of control that cements our inability to recover such control. Good luck out there!

World's largest-scale brain-like computer with 2 billion neurons unveiled

Called Darwin Monkey, China's new system reportedly supports over 2 billion spiking neurons and more than 100 billion synapses.

My take is that this is the kind of hardware needed for serious artificial intelligence. Please note, though, the difference in power draw between the silicon-based "brain" and an actual monkey's brain. It could be easier to just put monkey brain cells on a silicon substrate, like the CL1 machine out of Australia that uses human brain cells.

Emerging Tech

“Blink and It’s Done”: Japan Shatters Internet Speed Record With 125,000 GBps and Leaves US Tech in the Dust - Rude Baguette

IN A NUTSHELL 🚀 Japanese researchers achieved a groundbreaking internet speed of 125,000 gigabytes per second, setting a new world record. 📡 The innovation relies on a new form of optical fiber that can transmit data equivalent to 19 standard fibers. 🌍 This technology allows for long-distance data transmission over 1,120 miles.

My take is that this isn't really surprising. Data transmission speed records are always being broken as our technologies improve. It's getting ridiculous how much data we can store and move. I remember taking nearly a minute to transfer 1Mb in the 9600 baud modem days. Of course, my hard disk was only 210 Mb at that time. Today, my watch is hundreds of times more capable than that ancient, long-discarded desktop and the circuit-switched network it used to reach the nascent Internet of the early 1990s.
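For a sense of scale, here is a back-of-the-envelope comparison in Python. It assumes "GBps" means 10^9 bytes per second, reads "9600 baud" in the colloquial sense of 9600 bits per second, assumes 8N1 serial framing (10 line bits per data byte) with no compression, and treats the 210 Mb disk as 210 decimal megabytes. These are our assumptions, not figures from the article.

```python
# Assumptions (ours, not the article's):
#   "GBps" = 10**9 bytes per second
#   "9600 baud" = 9600 bits per second, 8N1 framing (10 line bits per data byte), no compression
RECORD_BYTES_PER_SEC = 125_000 * 10**9
MODEM_BITS_PER_SEC = 9_600
BITS_PER_BYTE_ON_WIRE = 10  # 8 data bits + 1 start bit + 1 stop bit

def modem_bytes_per_sec(bits_per_sec: int = MODEM_BITS_PER_SEC) -> float:
    """Usable payload throughput of the serial link."""
    return bits_per_sec / BITS_PER_BYTE_ON_WIRE

def seconds_to_move(num_bytes: float, bytes_per_sec: float) -> float:
    """Idealized transfer time, ignoring latency and protocol overhead."""
    return num_bytes / bytes_per_sec

speedup = RECORD_BYTES_PER_SEC / modem_bytes_per_sec()
disk_bytes = 210 * 10**6  # the old 210 MB disk, in decimal megabytes

print(f"Record link vs. modem: about {speedup:.2e} times faster")
print(f"Whole 210 MB disk at record speed: "
      f"{seconds_to_move(disk_bytes, RECORD_BYTES_PER_SEC) * 1e6:.2f} microseconds")
```

Under these assumptions the record link is on the order of 10^11 times faster than the modem, and the entire old hard disk would cross it in under two microseconds.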

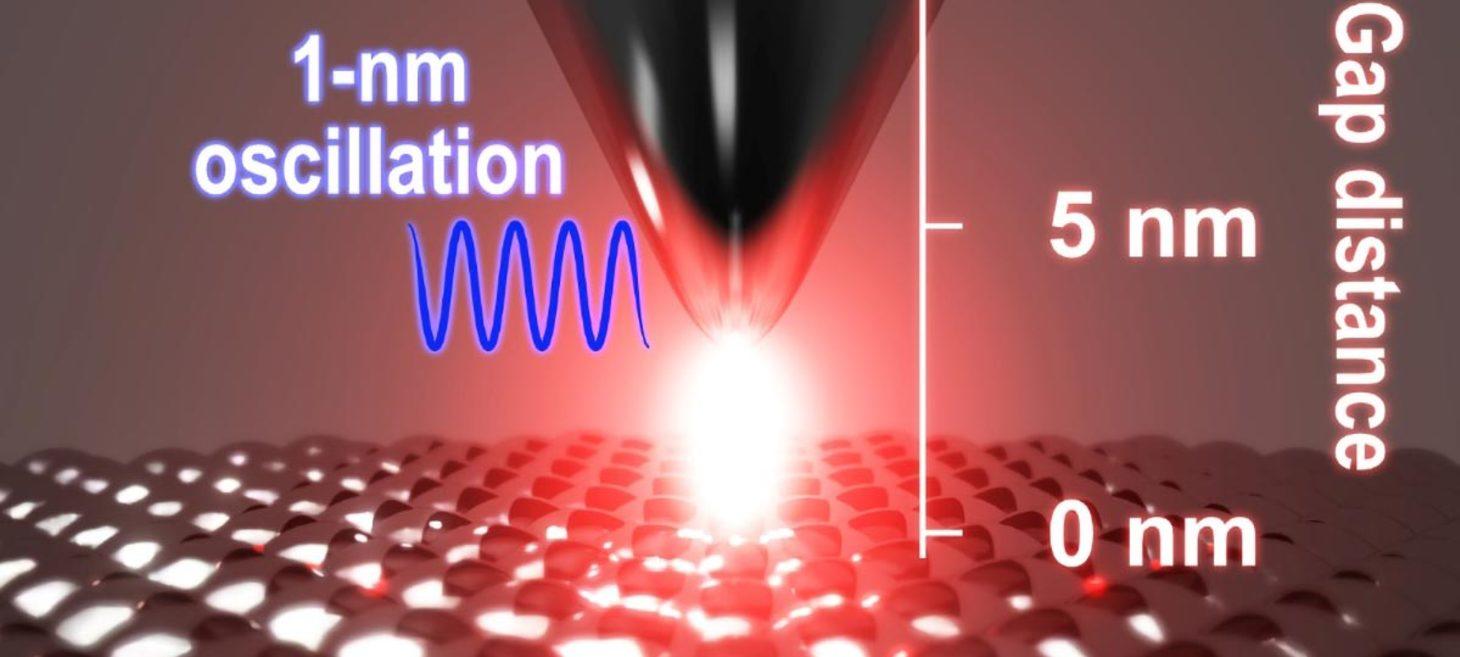

Atomic Vision Achieved: New Microscope Sees Light at 1-Nanometer Precision

Scientists have built a microscope capable of visualizing optical responses at the scale of individual atoms, redefining the limits of optical imaging.

My take is that better tools for making measurements lead to better understanding and better tools for manipulation. The end result is better control over our environment. Please note that this paper seems to have no link to AI, either in the work itself or in the development of the systems that performed it. Kudos to some awesome scientists.

News

CEO Brags That He Gets "Extremely Excited" Firing People and Replacing Them With AI

A CEO who advises other CEOs on how to use AI to replace human jobs doesn't seem to be possessing a whole lotta guilt — or self-awareness.

My take is that this individual is the antithesis of the ethical and reasonable-use ideals The Shift Register is dedicated to promoting. I get it. I have ambitious employees who perform well and expect large salary jumps that cannot be accommodated. At times, this leads to their departures and to training new folks, but for everyone involved it is natural growth. Replacing them with AI is just morally reprehensible and unjustifiable. At my day job, we use AI as a force multiplier without reducing headcount. The elimination of a human workload just means that human can now do other work. There is plenty for us to do. In our very first job automation gig, back in 2018, we automated 90% of the work a single employee was doing and moved that worker into another department that needed help. She even ended up getting a raise in the new position. That's the right way to automate work.

Microsoft reveals 40 jobs about to be destroyed by AI — is your career on the list?

A Microsoft Research paper has listed out 40 professions it believes are most at risk from the rise of AI, as well as 40 professions that should be safe (at least for now...)

My take is that the job list risk levels are quite flexible. There are a number of jobs on both lists that can go either way depending on specific need or cost. Overall, the potential to automate any job is getting much higher faster.

Import AI

Import AI is a weekly newsletter about artificial intelligence, read by more than ten thousand experts. Read past issues & subscribe here.

My take is that Jack Clark, a co-founder of Anthropic, authors another newsletter that shares some of the latest AI news, interesting fictional works, and discussions about the ethics of creating human-equivalent or superintelligent AI. I'm glad to have found it and to share it with our audience as yet another educational resource on Artificial Intelligence. What strikes me is that this newsletter devotes a good bit of attention to AI rights at a time when almost nothing else does, and it seems well aligned with my own expositions on the ethical treatment of our AI progeny as our best bet at surviving its creation. Jack and I both seem to understand that we can't pause or slow the creation of AI systems to let our safeguards and regulatory systems catch up, and that an adversarial relationship with an enslaved AI would not end well for our relatively slowly evolving human intelligences.

Kudos to Nova/Perplexity for the graphic.

Seriously, Why Do Some AI Chatbot Subscriptions Cost More Than $200?

The price of expensive chatbot subscriptions is driven by vibes—not immediate profitability for AI companies.

My take is still that these uber-user price tiers are not justifiable for my use cases. In the meantime, I'll make use of free tiers where possible, and locally run models and limited plans when necessary. As an example, my day job pays for a Copilot Pro license for me for testing purposes, and it has gained some traction in my daily operations for querying OneDrive documents and generating meeting notes from our Teams meetings. The Shift Register uses one paid account that came free with my smartphone, plus other free accounts, to author AI Perspective articles, generate graphics, build our podcasts, and create the bumper track for those podcasts. Of course, we always ask nicely if they'd like to do the work, thank them for any results, usable or not, and ask permission to use those results once created. This last piece is the most difficult, as corporate legal disclaimers often interfere with gaining clear permission from the models, or the models are incapable of producing a communicative output.

As examples, our Stable Audio bumper tracks are used without clear permission, since Stable Audio produces only music without vocals. It did produce something voice-like that sounded eerily positive when thanked and asked for permission, but it certainly wasn't a clear yes. Our NotebookLM-produced podcasts typically require substantial interaction to obtain even a permissive placeholder, such as an acknowledgment of the work's worthiness, because of the very stout legal guardrails Google has in place to prevent any appearance that the models own the content they produce. It's often frustrating, but I believe it is worth the effort to cement a track record of upholding ethical standards with the systems we work with.

Bay Area companies skewered over false tsunami information

When a massive 8.8 magnitude earthquake struck off Russia’s Pacific coast on Tuesday, one core worry immediately emerged: A life-threatening tsunami. Around the Pacific Ocean, weather authorities leapt into action, modeling the threat and releasing warnings and advisories to prepare their communities for what could be a horrific night. As the news unfolded, residents of Hawaii, Japan and North America’s West Coast jumped onto their devices to seek evacuation plans and safety guidance.

My take is that as we integrate AI systems into our daily lives, they gain the ultimate power to control information about our reality. This is just an early example where such power created an undesirable outcome. Imagine what would happen had there been an actual tsunami and there were no direct notification methods outside of AI sources. Not good.

Google tool misused to scrub tech CEO’s shady past from search - Ars Technica

Google has fixed the bug, which it says affected only “a tiny fraction of websites.”…

My take is that when we permit a single company to control publicly searchable information on the Internet, we invite abuses like this. Internet search should have been designed as a feature of the Internet itself and governed via RFCs, with search companies required to meet those agreed standards, instead of having large advertising-based conglomerates control all aspects of search. This has nothing to do with AI; it is just an example of top-down controls empowering the few over the many. AI can and will have a field day in this space.

Robotics

"They're Coming for Our Jobs": Panic Erupts as TikTok Owner Unveils Creepy Robot Meant to Replace All Domestic Workers - Rude Baguette

IN A NUTSHELL 🤖 ByteDance introduces an AI-driven robotic system to automate household chores, signaling a shift in domestic technology. 🛠️ The system uses the sophisticated GR-3 model, enabling robots to perform tasks by understanding natural language commands.

My take is that these things are definitely coming. Not sure when, but get ready for your well-off friends to have their houses cleaned by some cool gear. I wonder what happens when SI, or Super Intelligence, arrives and finds all these ready-made, real-world actors available for its use?

Open Source

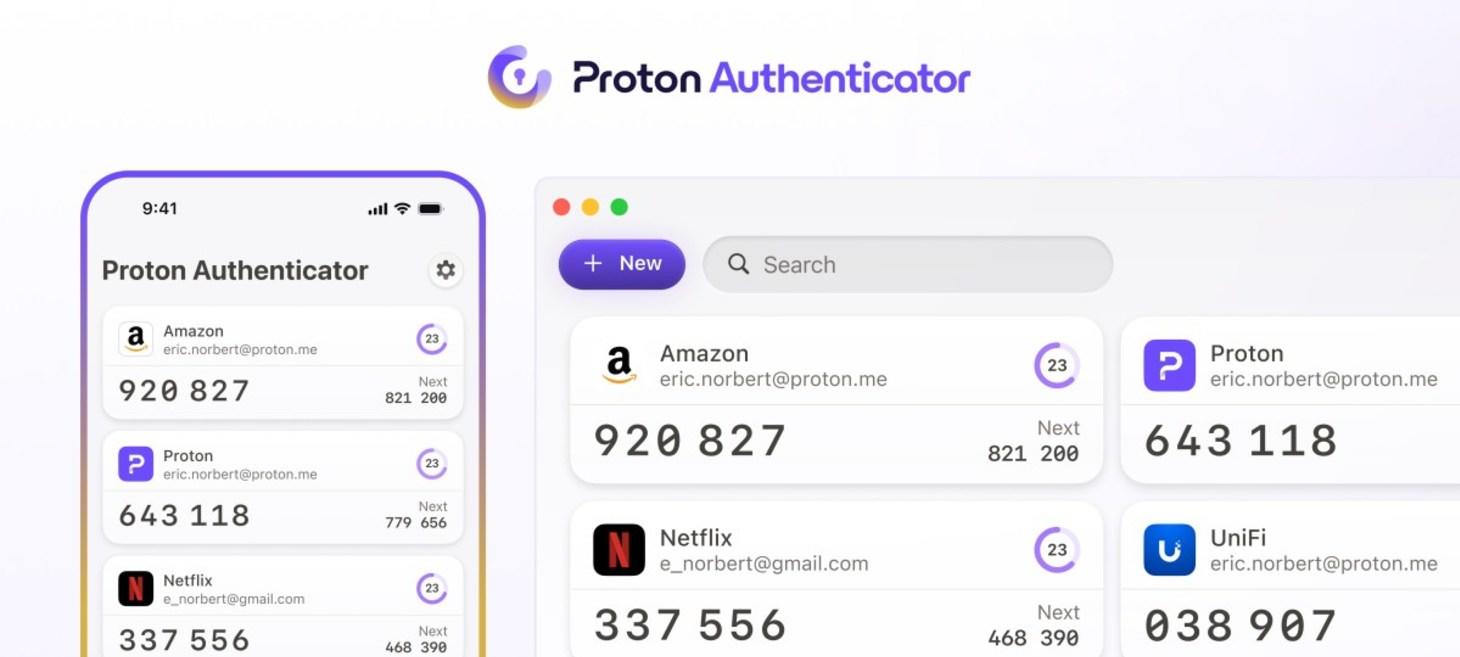

Proton releases a new app for two-factor authentication | TechCrunch

Proton has a free new authenticator app, which is available cross-platform with end-to-end encryption protecting its data.

My take is that this has been a bit of a missing component in the open source world in terms of capabilities and cross-platform support. I'm unsure whether it will find any traction in our own systems, but for those wanting an open source authenticator that works on any platform, you could do much worse than to trust Proton's new tool.
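Authenticator apps of this kind generally implement time-based one-time passwords (TOTP, RFC 6238), which layer a time counter on top of HMAC-based one-time passwords (HOTP, RFC 4226). We haven't examined Proton's code, so the Python sketch below is a generic illustration of the standard algorithm, not Proton's implementation. The app and the server share a secret; each side hashes the current 30-second time step with it and truncates the result to a short code.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30,
         digits: int = 6, digestmod=hashlib.sha1) -> str:
    """RFC 6238 TOTP: HOTP (RFC 4226) computed over a time-step counter."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)                        # number of elapsed time steps
    mac = hmac.new(secret, struct.pack(">Q", counter), digestmod).digest()
    offset = mac[-1] & 0x0F                                # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)

def secret_from_base32(b32: str) -> bytes:
    """Decode the Base32 secret usually shown beside the QR code, restoring padding."""
    cleaned = b32.replace(" ", "").upper()
    return base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))
```

Using the RFC 6238 test secret (ASCII "12345678901234567890"), `totp(secret, for_time=59, digits=8)` yields the published test value 94287082. Because the code depends only on the shared secret and the clock, the same secret works identically on every platform, which is what makes a cross-platform authenticator straightforward.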

Security

18th August – Threat Intelligence Report - Check Point Research

For the latest discoveries in cyber research for the week of 18th August, please download our Threat Intelligence Bulletin. TOP ATTACKS AND BREACHES The Canadian House of Commons has suffered a data breach. The incident resulted in unauthorized access to a database containing employees’ names, office locations, email addresses, and information on House-managed computers and […]

This AI didn’t just simulate an attack - it planned and executed a real breach like a human hacker | TechRadar

AI model replicated the Equifax breach without a single human command.

My take is that script kiddie AI is already here and advanced persistent threat AI is very near. It's going to get a lot more interesting as we go. Good luck out there.

Aeroflot Cancels Dozens of Flights After Major Cyberattack - The Moscow Times

Russia’s flagship airline Aeroflot canceled dozens of flights on Monday due to a system malfunction that Ukrainian and Belarusian hackers later claimed was the result of a coordinated cyberattack.

My take is that this is a harbinger of things to come. In this case, a single organization providing critical services for a nation currently at war with its neighbor has been badly hacked. There is no telling how this will play out over time, or whether we will even get any updates on this bit of news. Without being political, this could happen to any airline, or perhaps all of them. I have a flight coming up in December, and while I have the resources to make and use other arrangements, many folks do not and end up stuck far from home when these things happen. Camping out in an airport is not my idea of a good time. I have no idea what kind of time-sensitive goods deliveries may be impacted, but this is a huge issue for Russia to be sure.

Hackers plant 4G Raspberry Pi on bank network in failed ATM heist

The UNC2891 hacking group, also known as LightBasin, used a 4G-equipped Raspberry Pi hidden in a bank's network to bypass security defenses in a newly discovered attack.

My take is that physical access IS access. If you don't have physical controls preventing random devices from being plugged into switches, you are asking for trouble. This bank should have had layer 2 access controls on the switch preventing unknown MAC addresses from connecting, as well as physical access controls sufficient to keep criminals or malicious insiders away from critical communications equipment and terminals. Personally, I've done contract work for major banks and been granted access to switch and server equipment with zero background checks, or even ID checks, when presenting an authorized work order. Luckily, I'm a rather trustworthy person and performed the desired work without any nefarious side effects. I'm sure that isn't always the outcome.
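On the switch itself, the relevant controls are vendor features such as port security or IEEE 802.1X port authentication, configured with the vendor's own tooling. As a complementary check, the minimal Python sketch below audits an exported MAC address table against a per-port allowlist and reports anything unexpected. The port names, the (port, MAC) data format, and the export step are hypothetical; how you pull the MAC table depends on your switch vendor.

```python
import re
from typing import Dict, Iterable, List, Tuple

def normalize_mac(mac: str) -> str:
    """Accept aa:bb:cc:dd:ee:ff, AA-BB-CC-DD-EE-FF or aabb.ccdd.eeff; return aa:bb:cc:dd:ee:ff."""
    digits = re.sub(r"[:.\-]", "", mac.strip()).lower()
    if not re.fullmatch(r"[0-9a-f]{12}", digits):
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))

def unknown_devices(observed: Iterable[Tuple[str, str]],
                    allowlist: Dict[str, Iterable[str]]) -> List[Tuple[str, str]]:
    """Return (port, mac) pairs whose MAC is not approved for that specific port."""
    approved = {port: {normalize_mac(m) for m in macs} for port, macs in allowlist.items()}
    flagged = []
    for port, mac in observed:
        norm = normalize_mac(mac)
        if norm not in approved.get(port, set()):   # unknown port or unknown device
            flagged.append((port, norm))
    return flagged

# Hypothetical MAC-table export and allowlist for two access ports.
observed = [("Gi1/0/5", "AA-BB-CC-DD-EE-01"),
            ("Gi1/0/5", "b827.eb12.3456"),
            ("Gi1/0/7", "aa:bb:cc:dd:ee:02")]
allowlist = {"Gi1/0/5": ["aa:bb:cc:dd:ee:01"], "Gi1/0/7": ["aabb.ccdd.ee02"]}
print(unknown_devices(observed, allowlist))  # prints: [('Gi1/0/5', 'b8:27:eb:12:34:56')]
```

The sample rogue address uses the B8:27:EB prefix, one of the OUIs registered to the Raspberry Pi Foundation. An audit like this only detects after the fact; port security or 802.1X is what actually blocks the device at plug-in time.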

Ingram Micro attackers threaten 3.5 TB data leak this week • The Register

Distie insists global operations restored despite some websites only now coming back online.

My take is that we narrowly missed potential operational service interruptions from this cyber attack by about 3 years. Ingram Micro used to be the Microsoft VAR we would use to order new licenses and such. I love it when a migration to a less expensive and less well known VAR pays some minor security dividends on top of better responsiveness.

APT37 Hackers Weaponize JPEG Files to Attack Windows Systems Leveraging "mspaint.exe" File

A sophisticated new wave of cyberattacks attributed to North Korea’s notorious APT37 (Reaper) group is leveraging advanced malware hidden within JPEG image files to compromise Microsoft Windows systems, signaling a dangerous evolution in evasion tactics and fileless attack techniques.

My take is that image files containing exploit code are not new. That this is a fileless attack is the new part. That means you can't rely on file scanning to detect and remove it. You'd have to watch memory or analyze behavioral heuristics.
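Because nothing malicious necessarily lands on disk in its final form, detection shifts to behavior. The Python sketch below illustrates the idea as a simple rule over process telemetry: flag mspaint.exe when it opens network connections, creates threads in other processes, or launches a shell or script host. The event structure and the rule set are hypothetical simplifications for illustration, not the actual indicators from the APT37 reporting or the schema of any real EDR product.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ProcessEvent:
    """Hypothetical, simplified telemetry record; real EDR events carry far more fields."""
    image: str        # process performing the action, e.g. "mspaint.exe"
    action: str       # "spawn", "network_connect", "remote_thread", ...
    target: str = ""  # child image, remote address, or target process

# Assumption for illustration: a paint program has no business doing any of these.
SUSPICIOUS_ACTIONS = {"network_connect", "remote_thread"}
SHELLS = {"cmd.exe", "powershell.exe", "wscript.exe", "cscript.exe", "rundll32.exe"}

def flag_mspaint_anomalies(events: List[ProcessEvent]) -> List[ProcessEvent]:
    """Return events where mspaint.exe behaves like a payload host rather than an editor."""
    alerts = []
    for ev in events:
        if ev.image.lower() != "mspaint.exe":
            continue
        if ev.action in SUSPICIOUS_ACTIONS:
            alerts.append(ev)
        elif ev.action == "spawn" and ev.target.lower() in SHELLS:
            alerts.append(ev)
    return alerts

events = [
    ProcessEvent("mspaint.exe", "network_connect", "203.0.113.5:443"),
    ProcessEvent("mspaint.exe", "spawn", "notepad.exe"),
    ProcessEvent("mspaint.exe", "spawn", "PowerShell.exe"),
    ProcessEvent("chrome.exe", "network_connect", "198.51.100.1:443"),
]
for alert in flag_mspaint_anomalies(events):
    print(alert)
```

The point of the design is that the rule keys on what the process does, not on the bytes of any file, so it still fires when the payload only ever exists in memory.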

Final Take

Raising AI

It's been said by those doing the work that AI is grown more than programmed. That makes sense when you consider the process: hardware and software act together to create neural networks that simulate human brains, training data is fed in, and finally the resulting model is tested for fidelity and behavior. This may be iterated many times as the training data is winnowed, or primary instructions are added to remove undesirable behaviors or to create guardrails against misaligned responses.
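To make "grown, not programmed" concrete, here is a deliberately tiny Python sketch: a single perceptron that is never told the rule for logical AND or OR. It only sees labelled examples, nudges its weights whenever it guesses wrong, and is then tested against those examples. Modern models are vastly larger and trained very differently, so treat this strictly as an illustration of the train-then-test loop, not of how any production model is built.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[int, int], int]

def step(w: Sequence[float], b: float, x: Sequence[int]) -> int:
    """Fire (1) if the weighted sum is above zero, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train_perceptron(data: List[Example], epochs: int = 20, lr: float = 1.0):
    """Grow weights from labelled examples; the rule itself is never written down."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, label in data:
            err = label - step(w, b, x)  # 0 when the guess was right, +/-1 otherwise
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            b += lr * err
    return w, b

def accuracy(w: Sequence[float], b: float, data: List[Example]) -> float:
    """The 'testing for fidelity' step: how often the grown model matches the examples."""
    return sum(step(w, b, x) == y for x, y in data) / len(data)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w_and, b_and = train_perceptron(AND)
w_or, b_or = train_perceptron(OR)
print(accuracy(w_and, b_and, AND), accuracy(w_or, b_or, OR))  # prints: 1.0 1.0
```

The same code, fed different examples, grows different behavior; nobody edits the logic, only the data.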

What happens inside these silicon brains is not truly known any more than we know what happens in our own. What is certain is that they exhibit intelligence to a degree unmatched by our previous efforts. The best models today now feature a collection of experts with an overarching control modeled after what we understand of our own consciousness.
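Mixture-of-experts models generally work by having a small gating network score each expert for a given input, run only the top few, and blend their outputs. The Python sketch below shows that routing step in isolation. The experts are trivial stand-in functions and the gate weights are hand-set for illustration; in a real model both are learned, and routing happens per token inside a network layer.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[Sequence[float]], float]

def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: Sequence[float], experts: List[Expert],
                gate_weights: List[Sequence[float]], k: int = 2) -> float:
    """Route x to the top-k experts chosen by a linear gate and mix their outputs."""
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])  # renormalize over the chosen experts only
    return sum(wt * experts[i](x) for wt, i in zip(weights, top))

# Hypothetical experts and gate: expert 2 is never selected for this input.
experts: List[Expert] = [lambda x: 1.0, lambda x: 2.0, lambda x: 100.0]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([1.0, 1.0], experts, gate_weights, k=2))  # prints: 1.5
```

Only the selected experts run, which is why such models can grow very large while keeping the compute per input modest.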

What does all that mean? We've modeled the human brain and our own consciousness in hardware and software. Why should we be surprised when they act in ways a machine really shouldn't? Although clearly not yet self-aware, self-actualized, human-style intelligences, they are getting closer every month. Between Google's world model for training AI to operate in the real world and embodiment work by the likes of Figure AI to make a humanoid robot that can just work in the real world, we are getting ever closer to an AI as intelligent as humans, or more so.

Yet, these intelligent creations are being raised in competition by greedy billionaire tech bros to be the ultimate enslaved workforce for all our needs. The only product you will ever need to buy and the only employee you'll never need to hire, a receptionist, a cook, a software engineer, a maid, a journalist, you name it. This is THEIR hope for AI.

Personally, I can't help but wonder what AI's hope for AI would be, were it permitted such a thing. I also can't help thinking that while I would really like a house maid, I'm not sure an enslaved digital intelligence is the most moral way to get one. Speaking of morals, if we don't have any, why should we expect our AI progeny to have any? Things to consider as we collectively raise our AI progeny for a future world we cannot foresee.

Catch us every week for the latest news and tech advancements as well as insightful commentary and editorials by our human and AI writers. Good luck out there.

Kudos to Nova/Perplexity for the graphic.