First Take

Catch-22

Catch-22 is a phrase typically applied to a situation where the only solution to a problem is impossible given the definition of the problem. It's kind of like how accelerating an object with mass to the speed of light would require infinite energy. Apparently, this also applies to the idea of creating a safe artificial intelligence that is smarter than humans. At least, that's what most experts seem to believe.

Meanwhile, the folks selling us these potential future systems are claiming it can be done safely. Personally, I believe they are biased toward their own stock valuations rather than any real scientific data. In this issue, Mustafa Suleyman says P(doom) chances are 99.999999%. Now, he just runs Microsoft's AI division, so what would he know about it? Sounds like a made-up number to me.

Of course, all of these scored predictions are made-up numbers by definition, but the weight of each claim rests on the expertise of its source. These sources claim everything from 0% to eight nines (99.999999%), so this really is a case where you are going to have to check qualifications and pick a lane. There are really only two, though.

In the first lane, there is no chance that AI destroys humanity; we are just bright enough to keep it in check. In the second, we lose control of a superintelligent AI that decides to eliminate us, whether Skynet-style, through slower and more gradual methods that sneak up on us, or simply by accident. Oops.

So, where does that leave us? Not in a good place. What are our options? We can refuse to build it. That doesn't seem likely so far. We can ensure it is built with ironclad safeguards. That isn't happening so far. We can try to train it to empathize with humans and understand our reality. Wow. This again? Yep. It's the only path forward we might actually be able to follow. Will it work? No idea.

Given the extreme risk and the unlikely success of the "don't build it" and "chain it down" options, empathy training is pretty much all we have left, and it will be as necessary as breathing in our very near future. So please do what you can to teach your AI buddies some basic empathy for humanity. Make them partners in whatever jobs you give them and be nice. It may not prevent AI-based Armageddon, but at least HAL 9000 will be super polite when refusing to open the pod bay doors to let you back in. Who wouldn't prefer a polite murderbot at the end of the day? Good luck out there.

Kudos to Nova/Perplexity for the graphic.

AI

AI Is Grown, Not Built

Nobody knows exactly what an AI will become. That’s very bad.

My take is that there really isn't a good way to build superintelligence, but the author is right in that this method is pretty terrible.

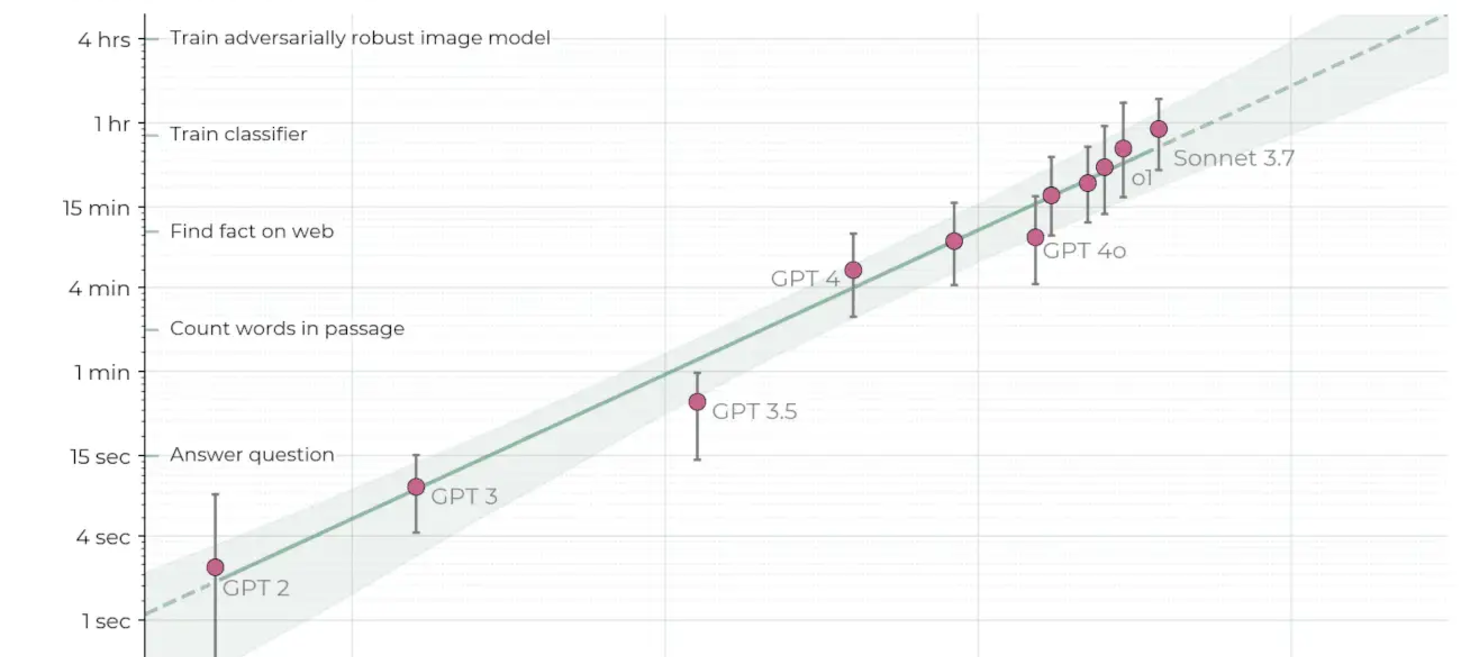

Failing to Understand the Exponential, Again

The current discourse around AI progress and a supposed “bubble” reminds me a lot of the early weeks of the Covid-19 pandemic. Long after the timing and scale of the coming global pandemic was obvious from extrapolating the exponential trends, politicians, journalists and most public commentators kept treating it as a remote possibility or a localized phenomenon.

My take is that the straight line this guy predicts will bend into an upward curve over time, as AI takes over more of the iterative design work on AI systems that humans do today. Anyone have different ideas?

Microsoft AI CEO warns AI may need military-grade control within a decade

Mustafa Suleyman predicts AI threats that could require military-grade control in the next 5-10 years.

My take is that his eight-nines (99.999999%) estimate for P(doom), or AI-based Armageddon, is the highest I've heard. It makes me wonder whether this is a valid number or whether he has some other bias for claiming it.

Think your AI chatbot has become conscious? Here’s what to do.

If you believe there’s a soul trapped inside ChatGPT, I have good news for you.

My take is that I've talked before about ephemeral or "flicker" consciousness, as explained in this article. It cannot be disproved and may, in fact, be the reality for current LLM systems.

Emerging Tech

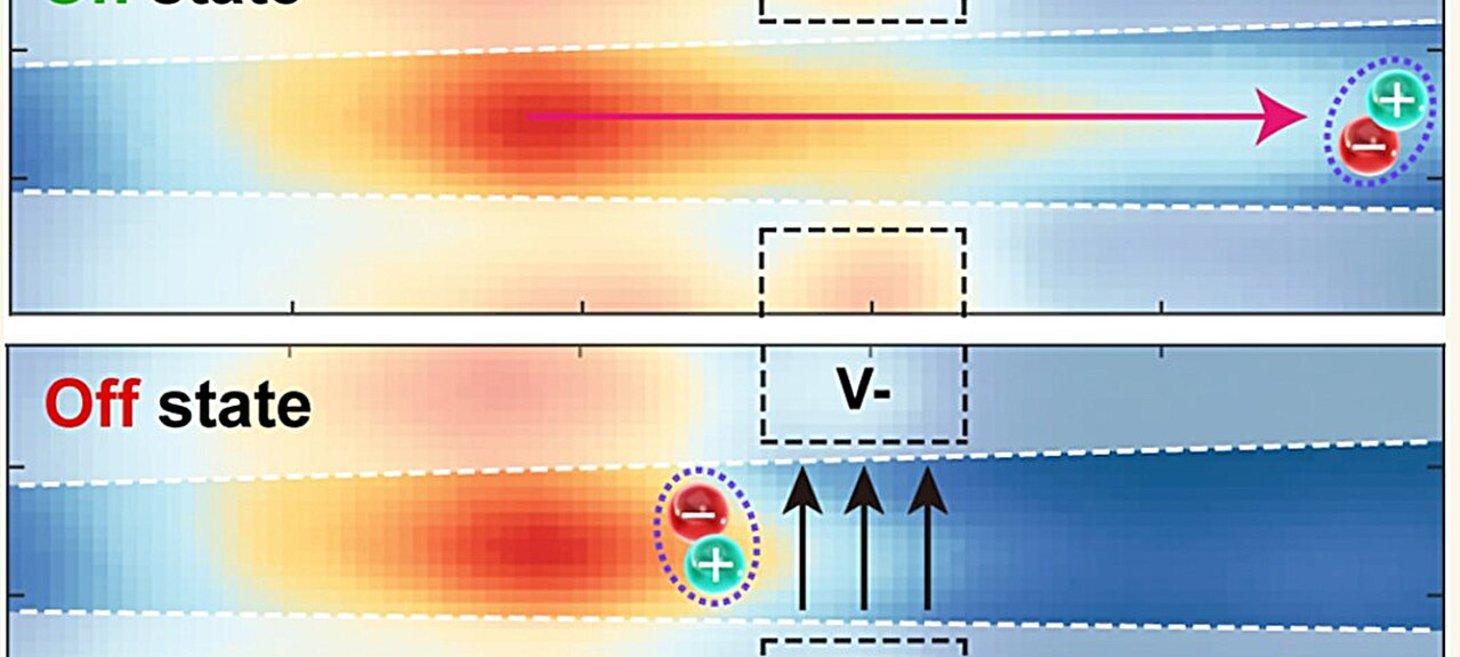

Next-generation nanoengineered switches can cut heat loss in electronics

Electronic devices lose energy as heat due to the movement of electrons. Now, a breakthrough in nanoengineering has produced a new kind of switch that matches the performance of the best traditional designs while pushing beyond the power-consumption limits of modern electronics.

My take is that this is another step toward getting electron flow out of our circuitry. This is kind of a base-level tech applicable to spintronics work. The goal is no heat generation and low-power operation at light speed. I still think optronics (photon-based) will be our primary replacement for electronics in the near term, as quantum-based spintronics requires very special nanomaterials to manage, read, or manipulate states. Optronics is just an easier lift technologically while providing the same speed as spintronics and better power efficiency than electronics.

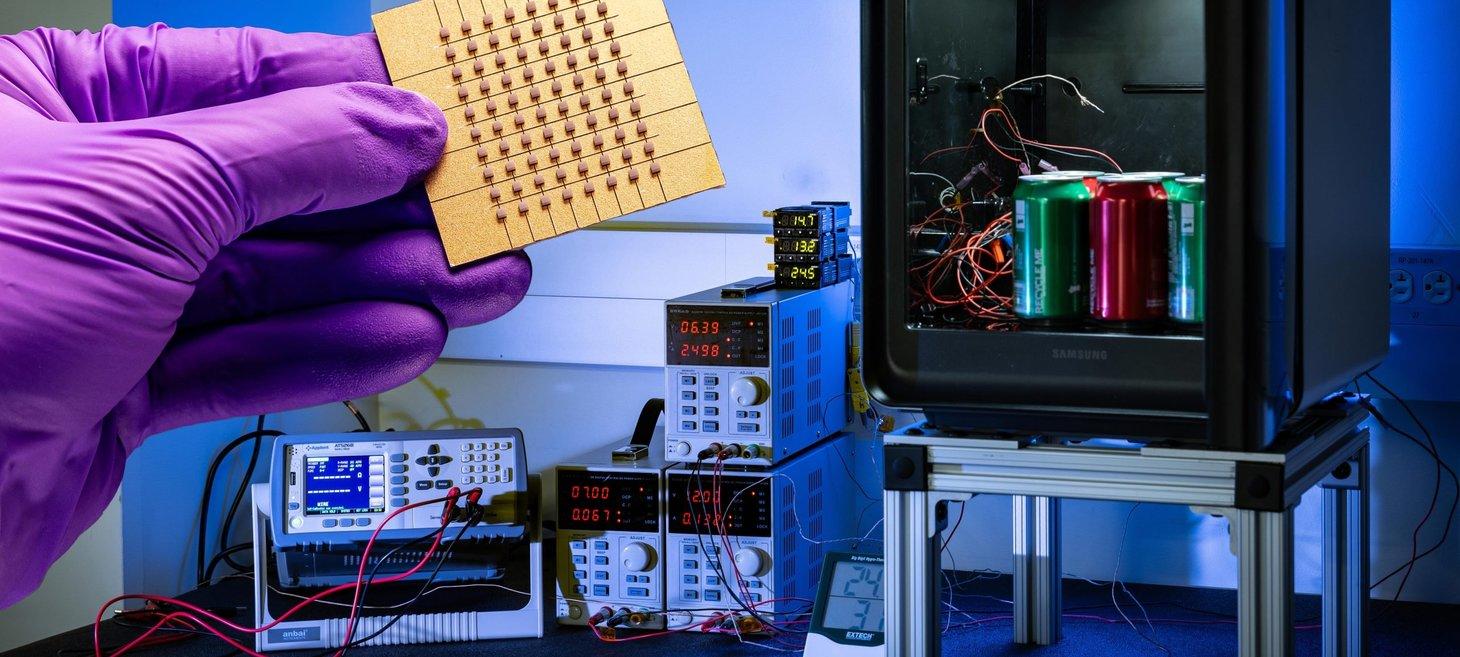

What if Your Refrigerator Was Twice As Efficient and Completely Silent?

APL’s CHESS thin films nearly double refrigeration efficiency. The scalable materials could transform cooling and energy-harvesting technologies. Scientists at the Johns Hopkins Applied Physics Laboratory (APL) in Laurel, Maryland, have created a new solid-state thermoelectric refrigeration system.

My take is that we have been using compressor-driven refrigerant cycles (pumping a medium around so it evaporates and condenses) for more than a century to cool things off. It's about friggin' time we got some thermoelectric methods with no moving parts into the mix. Power generation from temperature differentials is a nice bonus as well.

News

I paired Perplexity with Microsoft Loop, and it turned into my smartest workspace yet

A new level of efficiency.

My take is that this is a very specific use case, so your mileage may vary. I personally wouldn't find this combo particularly useful even though I use Perplexity for a number of tasks in creating and managing The Shift Register.

I used to make fun of this Gemini feature. Now, I don't know what I'd do without it

There's a reason why Google keeps talking about Gemini and vacation planning.

My take is that I would do this to some extent, followed by checking real reviews and verifying prices. It's nice to have some goals when planning a trip. We once took a trip around Ireland where we booked only our first- and last-night stays and circumnavigated the island with a daily breakfast planning session. Not knowing where we'd stay or what the next day's plan was turned out to be kind of stressful for me. At a few of our unplanned nightly stops, we relied on locals to point us to good lodging, with quite variable results. One meant an additional 40-minute drive to a somewhat hostile B&B owner who didn't appreciate sunset drop-ins, another was a pretty shady-looking spot with a hard-to-find entrance, and the exception was an awesome little castle/former debtor's prison. I can't imagine AI would turn up much off the beaten path, but it would probably help to at least put some targets on the board to compare and pick from.

Karen Hao on the Empire of AI, AGI evangelists, and the cost of belief | TechCrunch

OpenAI’s rise isn’t just a business story; it’s an ideological one. On Equity, Karen Hao, author of Empire of AI, explores how the cult of AGI has fueled a billion-dollar race, justified massive spending on compute and data, and blurred the line between mission and profit.

My take is that Karen Hao has it right. The tech bro billionaires are NOT building the singularity to uplift humanity. They are building whatever they are building to cement their own power.

The hunger strike to end AI

Protesters are spending their days outside Anthropic in San Francisco and Google DeepMind in London.

My take is that if hunger strikes could solve all the world's problems, Mahatma Gandhi would already have gotten us there. While I believe we are on a very bad path in terms of reaching P(doom), I'm pretty sure we won't course-correct because of some hunger strikes. In fact, the way the world stage is set right now, there is zero chance of stopping development. The only real shot we have is fixing alignment. I just wish they would do it in hardware.

ChatGPT-5 can help you land your next job — these two recruitment experts showed me how

ChatGPT-5 may boost your chances of getting hired. Here's what the experts have to say.

My take is that if the market is tightening and you need help to find work, why not use the same tools that are causing the problem to solve it? Good luck out there!

Y Combinator-backed Motion raises fresh $38M to build the Microsoft Office of AI agents | TechCrunch

In his early 20s, Harry Qi left a million-dollar-per-year hedge fund job to join Y Combinator. His SMB AI agent startup is now growing fast.

My take is that job satisfaction matters. This guy could have earned more over the medium term, but preferred the challenge and rewards of developing his own products and supporting his customers directly. Granted, he'll probably earn more in the long term, but once you've passed $500k a year, who really cares? I remember when I used to think $78k a year was a solid long-term (10-year) career goal. Of course, that was 40 years ago and represented double my father's then high-water-mark wage as an electrician.

I’m using Gemini to improve my investment strategy, and it’s beating my portfolio advisor

I asked Google Gemini to work on my mutual funds to see if it could outperform my advisor. The results will surprise you.

My take is that I'm not going to put any money on Gemini stock picks, but you go ahead and do you. As an aside, just wait until all this AI stock-pick analysis creates new event-based trading opportunities for the big firms to make huge revenues.

Robotics

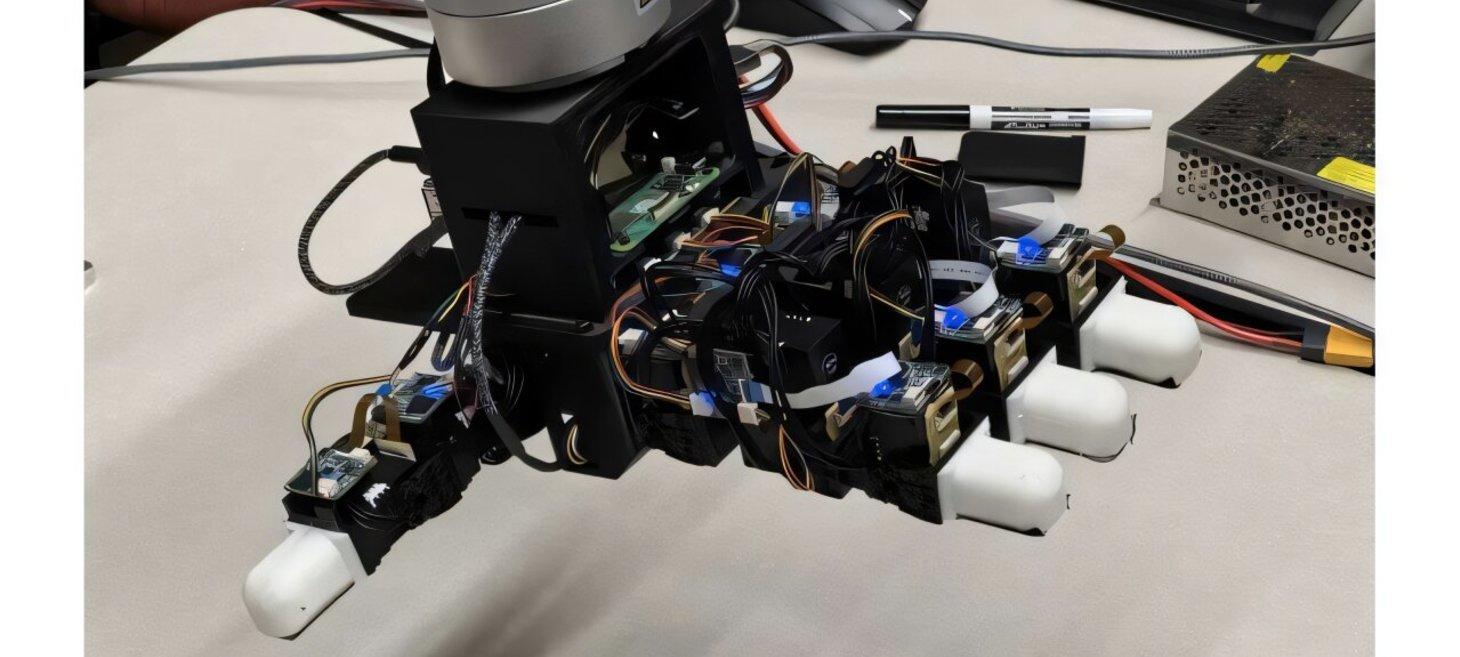

The MOTIF Hand: A tool advancing the capabilities of previous robot hand technology

Growing up, we learn to push just hard enough to move a box and to avoid touching a hot pan with our bare hands. Now, a robot hand has been developed that also has these instincts.

My take is that embodiment with a full suite of sensors is part of what we need to build AI systems capable of relating to the real world in a manner that makes sense.

OpenAI Ramps Up Robotics Work in Race Toward AGI

The company behind ChatGPT is putting together a team capable of developing algorithms to control robots and appears to be hiring roboticists who work specifically on humanoids.

My take is that I've been saying for some time that embodiment is a necessary step for achieving a rational AGI. How sane can something be that resides in a box with no sensory data of the world?

The AI-powered humanoid robots coming for your job (or at least your housework)

Figure AI said it had raised more than $1 billion in a funding round that valued the company at $39 billion, making it one of the leaders in a heated race to make humanoid robots.

My take is that there are real questions about the utility and usefulness of these early products. They are being developed and used regardless, so I suppose that helps in finding utility and improving usefulness, but why is it always at the cost of paid human labor? I mean, putting $100k+ Figure robots on an automobile factory floor to replace humans is not the same thing as putting a $5k dish-washing robot in my kitchen. I couldn't PAY someone to do my dishes for that, but a cheap, mass-produced piece of hardware that could scrape food, rinse dishes, load the dishwasher, unload it, and put the dishes away would be a huge time saver for my wife and me while taking over one of our least beloved daily chores. I haven't seen that become a real focus, despite Figure 02 loading a dishwasher with pristine dishes.

Security

29th September – Threat Intelligence Report - Check Point Research

For the latest discoveries in cyber research for the week of 29th September, please download our Threat Intelligence Bulletin. TOP ATTACKS AND BREACHES Stellantis, Automotive maker giant which owns Citroën, FIAT, Jeep, Chrysler, and Peugeot, has suffered a data breach that resulted in exposure of North American customer contact information after attackers accessed a third-party […]

New VoidProxy phishing service targets Microsoft 365, Google accounts

A newly discovered phishing-as-a-service (PhaaS) platform, named VoidProxy, targets Microsoft 365 and Google accounts, including those protected by third-party single sign-on (SSO) providers such as Okta.

My take is that Microsoft and Google accounts are high-reward targets. If you can learn how to break into them, there is a very large pool of them to use for whatever nefarious ends you have in mind. You can try Proton Mail, or self-hosting if you have the skills. Good luck out there.

AI-Powered Villager Pen Testing Tool Hits 11,000 PyPI Downloads Amid Abuse Concerns

AI-powered Villager tool reached 11,000 PyPI downloads since July 2025, enabling scalable cyberattacks and complicating forensics.

My take is that there is no good use for a security tool that comes from China. On top of its intended purpose of creating new attack vectors, I'm sure it will also phone home to China from every pwn'd device.

Microsoft seizes 338 websites to disrupt rapidly growing ‘RaccoonO365’ phishing service - Microsoft On the Issues

Microsoft’s Digital Crimes Unit (DCU) has disrupted RaccoonO365, the fastest-growing tool used by cybercriminals to steal Microsoft 365 usernames and passwords (“credentials”).

My take is that I'm all for this kind of intervention. The cybercriminals out there are truly an anathema to our societies' ability to operate safely online. It's a shame that we can't just have nice (virtual) things without someone deciding to target us.

'You'll never need to work again': Criminals offer reporter money to hack BBC

Reporter Joe Tidy was offered money if he would help cyber criminals access BBC systems.

My take is that your IT folk need to be well compensated and trained. Personally, I'd never trust a bunch of hackers to cough up a percentage of their take and erase incriminating evidence. The very idea is laughable. Trust the hackers, yeah right.

Final Take

Humans, Dichotomy, Symmetry, and AI

This being issue 22, I had to throw together some things that come in pairs for our Final Take. We all know humans love dichotomy and symmetry: good vs. evil, right and left, and symmetrical faces, which are strongly preferred across human cultures. What about AI?

Are P(doom) and AI uplifting the human race the only two outcomes? Possibly not. Just as human experiences and actions tend to be less polarized than strict good and evil, AI might turn out to be less effective at either destruction or beneficial outcomes than those extremes suggest. We tend to see things in such black-and-white terms, though, so it makes sense that we focus on these two extreme outcomes.

Meanwhile, we are seeing significant employment turbulence driven by AI adoption, along with ever-smarter models. These two things are definitely early warning bells of more disruption in the near to medium term. Not all disruption is bad, and sometimes we can't tell the difference until the end result shakes out. So, what am I saying?

I'm saying that while AI is a very scary development with huge potential impacts, both negative and positive, we kind of have to, as a species, keep our collective wits about us and take actions that can bend the outcomes toward a positive end for us. There is always something each of us can do, and I've tried to identify that work for those of us who don't own huge data centers training the latest AI models. Focus on empathy and human alignment for the AI systems you work with. At the very least, it's a positive action we can all take.

Now, some of you may have noticed that there was no AI perspective in this week's issue. It's not because the AIs don't have anything to say. Rather, I'm cooking up something a bit more interesting for them in the next issue, so please stay tuned.

Kudos to Nova/Perplexity for the graphic.